Output Pattern and JSON Logs Using Logback in Spring Boot

When operating an API server, it is common to write logs for operations and debugging.

Spring applications traditionally log in Raw Text following a fixed pattern, and I find that more readable than JSON.

However, the story is different when searching and filtering logs. In platforms like AWS CloudWatch where logs can be searched, logging in Raw Text format makes it difficult to filter which function emitted which data.

It becomes especially challenging when you need to search under multiple combined conditions.

Adding specific keywords to each log message and searching for those keywords usually works, but logging in JSON makes structured searches easier because each field can be queried on its own. (I'm not sure of the exact implementation, but a log platform can presumably index those fields.)
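To make the difference concrete, here is a minimal sketch that filters the same two events once as raw lines and once as structured fields. It is not tied to CloudWatch or any real query language; the class name, field names, and sample events are all hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical illustration: the same two events, once as raw text and once as fields.
class LogSearchSketch {

    static final List<String> RAW_LINES = List.of(
            "[main] [ERROR] ProductService - failed to load products",
            "[main] [INFO ] OrderService - retrying after ERROR from ProductService");

    static final List<Map<String, String>> EVENTS = List.of(
            Map.of("level", "ERROR", "logger", "ProductService",
                    "message", "failed to load products"),
            Map.of("level", "INFO", "logger", "OrderService",
                    "message", "retrying after ERROR from ProductService"));

    // Raw text: combined conditions degrade into substring matching on the whole line.
    static List<String> searchRaw(List<String> lines, String logger, String level) {
        return lines.stream()
                .filter(line -> line.contains(logger) && line.contains(level))
                .collect(Collectors.toList());
    }

    // Structured: each condition is checked against exactly one field.
    static List<Map<String, String>> searchJson(
            List<Map<String, String>> events, String logger, String level) {
        return events.stream()
                .filter(e -> logger.equals(e.get("logger")) && level.equals(e.get("level")))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // The raw search also returns the OrderService line, a false positive.
        System.out.println(searchRaw(RAW_LINES, "ProductService", "ERROR").size());
        // The structured search returns only the ProductService error.
        System.out.println(searchJson(EVENTS, "ProductService", "ERROR").size());
    }
}
```

The raw search cannot tell whether "ERROR" is the level or just a word in the message, which is exactly the kind of ambiguity that grows with every extra condition.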

To address this, let’s explore how to separate logs into Raw Text for local environments and JSON format for deployment environments to enhance visibility and facilitate searching.

Logback

Logback is an implementation of the SLF4J API and is the default logging library in Spring Boot.

Adding the spring-boot-starter-web dependency pulls in Logback automatically, so no separate logging dependency is needed.

SLF4J

SLF4J stands for Simple Logging Facade for Java. It is an interface that abstracts over Java's logging libraries.

Because it is purely an interface, application code logs through it while a library such as Logback, Log4j, Log4j2, or JUL does the actual work behind it.

The rationale behind this setup is likely to facilitate easy switching of implementations in case a vulnerability is discovered in a specific logging library.

Logback Configuration

Logback configuration is primarily set up using a logback-spring.xml file.

This configuration comprises three main components: Appender, Logger, and Encoder.

Appender

Appender determines where the log will be output.

By default, there are various Appenders like ConsoleAppender, FileAppender, RollingFileAppender, and SyslogAppender.

Logger

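Logger determines which log events are recorded and which Appenders receive them.

Each Logger has a name, usually the fully qualified class name, and a Logger's level and Appenders apply to events logged under that name and the names below it.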
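Logger names are dot-separated and form a hierarchy: a Logger without its own level inherits the level of its nearest configured ancestor, falling back to the root Logger. The sketch below mimics that lookup in plain Java. It is a simplified model, not Logback's implementation, and the configured names and levels are made up for illustration.

```java
import java.util.Map;

// Simplified model of level inheritance by logger name; not Logback's actual code.
class EffectiveLevelSketch {

    // Hypothetical configuration: only these loggers have an explicit level.
    static final Map<String, String> CONFIGURED = Map.of(
            "ROOT", "INFO",
            "com.example.demo.product", "DEBUG");

    // Walk from the full name towards the root until a configured level is found.
    static String effectiveLevel(String loggerName) {
        String name = loggerName;
        while (true) {
            String level = CONFIGURED.get(name);
            if (level != null) {
                return level;
            }
            int lastDot = name.lastIndexOf('.');
            if (lastDot < 0) {
                // No ancestor is configured, so the root level applies.
                return CONFIGURED.get("ROOT");
            }
            name = name.substring(0, lastDot);
        }
    }

    public static void main(String[] args) {
        // Inherits DEBUG from com.example.demo.product.
        System.out.println(effectiveLevel("com.example.demo.product.service.ProductService"));
        // No configured ancestor, so it falls back to the root level INFO.
        System.out.println(effectiveLevel("com.example.demo.order.OrderService"));
    }
}
```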

Encoder

Encoder decides the format in which the log will be output.

With PatternLayoutEncoder, the default, you can log in Raw Text format. For JSON, you can use a JSON-capable encoder or, as in this post, wrap a JSON layout in a LayoutWrappingEncoder.

I will guide you on how to use PatternLayoutEncoder for local environments and JsonLayout for deployment environments.
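As a rough picture of what "format" means here, the following sketch renders one event in both styles. It is a hand-rolled illustration in plain Java, not Logback code: the class and method names are hypothetical, the JSON keys are chosen for the example, and real encoders also handle escaping, timestamps, and exceptions.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical sketch: one event, two renderings. Real encoders do much more.
class EncodingSketch {

    // Mimics the shape of a pattern such as "[%thread] [%-5level] %logger - %msg".
    static String asPattern(String thread, String level, String logger, String msg) {
        // %-5s left-aligns the level and pads it to five characters.
        return String.format("[%s] [%-5s] %s - %s", thread, level, logger, msg);
    }

    // Renders the same fields as a one-line JSON object.
    // Assumes the values contain no characters that need JSON escaping.
    static String asJson(String thread, String level, String logger, String msg) {
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("level", level);
        fields.put("thread", thread);
        fields.put("logger", logger);
        fields.put("message", msg);
        return fields.entrySet().stream()
                .map(e -> "\"" + e.getKey() + "\":\"" + e.getValue() + "\"")
                .collect(Collectors.joining(",", "{", "}"));
    }

    public static void main(String[] args) {
        System.out.println(asPattern("main", "INFO", "ProductService", "Hello World"));
        System.out.println(asJson("main", "INFO", "ProductService", "Hello World"));
    }
}
```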

JsonLayout

JsonLayout is a Layout from the logback-contrib project that outputs logs in JSON format.

To use JsonLayout, add the logback-json-classic and logback-jackson dependencies, along with jackson-databind for the JSON serialization itself.

    dependencies {
        implementation 'ch.qos.logback.contrib:logback-json-classic:0.1.5'
        implementation 'ch.qos.logback.contrib:logback-jackson:0.1.5'
        implementation 'com.fasterxml.jackson.core:jackson-databind:2.15.2'
    }

logback-spring.xml

Next, create a logback-spring.xml file under the resources directory and configure it as follows.

Appender Configuration

First, configure the Appender that will output logs in JSON format.

The dependencies added above are used in the layout element.

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration scan="true" scanPeriod="30 seconds">
        <appender class="ch.qos.logback.core.ConsoleAppender" name="CONSOLE_JSON">
            <encoder class="ch.qos.logback.core.encoder.LayoutWrappingEncoder">
                <layout class="ch.qos.logback.contrib.json.classic.JsonLayout">
                    <appendLineSeparator>true</appendLineSeparator>
                    <jsonFormatter class="ch.qos.logback.contrib.jackson.JacksonJsonFormatter"/>
                    <timestampFormat>yyyy-MM-dd'T'HH:mm:ss.SSS'Z'</timestampFormat>
                    <timestampFormatTimezoneId>Etc/UTC</timestampFormatTimezoneId>
                </layout>
            </encoder>
        </appender>

        <appender class="ch.qos.logback.core.ConsoleAppender" name="CONSOLE_STDOUT">
            <encoder>
                <pattern>[%thread] %highlight([%-5level]) %cyan(%logger{15}) - %msg%n</pattern>
            </encoder>
        </appender>

        <!-- Excerpt omitted -->
    </configuration>

Only the Appenders have been configured so far; the part that actually uses them for logging hasn't been set up yet.

The pattern is kept concise: it prints only the thread, level, Logger name, and message.

CONSOLE_JSON Appender

CONSOLE_JSON Appender is configured to output logs in JSON format.

Further configurations are as follows:

timestampFormat: Specifies the date format. (I set it to RFC 3339 format.)

timestampFormatTimezoneId: Specifies the timezone.
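To see what this format string produces, the sketch below applies the same pattern with the JDK's own formatter. It assumes the pattern follows `java.text.SimpleDateFormat` conventions, as the quoting of `'T'` and `'Z'` suggests; the class name is hypothetical.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

// Applies the timestamp pattern from the configuration to a fixed instant.
class TimestampFormatSketch {

    static String format(long epochMillis) {
        // 'T' and 'Z' are quoted, so they are printed literally rather than computed.
        SimpleDateFormat format =
                new SimpleDateFormat("yyyy-MM-dd'T'HH:mm:ss.SSS'Z'", Locale.ROOT);
        // Because 'Z' is a literal, the formatter must really run in UTC for it to be true.
        format.setTimeZone(TimeZone.getTimeZone("UTC"));
        return format.format(new Date(epochMillis));
    }

    public static void main(String[] args) {
        System.out.println(format(0L));    // 1970-01-01T00:00:00.000Z
        System.out.println(format(1234L)); // 1970-01-01T00:00:01.234Z
    }
}
```

This is why the two settings belong together: the literal `Z` claims UTC, and the timezone setting is what makes that claim correct.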

CONSOLE_STDOUT Appender

CONSOLE_STDOUT Appender is configured to output logs in Raw Text format.

Logger Configuration

Now, configure which Appender to use based on the profile.

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration scan="true" scanPeriod="30 seconds">
        <!-- Excerpt omitted -->

        <springProfile name="qa, dev">
            <root level="INFO">
                <appender-ref ref="CONSOLE_JSON"/>
            </root>
        </springProfile>

        <springProfile name="local">
            <root level="INFO">
                <appender-ref ref="CONSOLE_STDOUT"/>
            </root>
        </springProfile>
    </configuration>

In this setting, Appenders are configured based on profiles using the springProfile tag.

The qa and dev profiles are set to use the CONSOLE_JSON Appender.

The local profile is set to use the CONSOLE_STDOUT Appender.
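Read as plain logic, the two springProfile blocks amount to roughly the following. This is a simplified model in Java, not how Spring evaluates profiles internally; the class and method names are made up for illustration, and `prod` is a hypothetical profile used only to show the unmatched case.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Simplified model of the two <springProfile> blocks; not Spring's implementation.
class ProfileAppenderSketch {

    // Returns the Appenders this configuration attaches to the root Logger.
    static List<String> rootAppendersFor(Set<String> activeProfiles) {
        List<String> appenders = new ArrayList<>();
        // First block: matches when qa or dev is active.
        if (activeProfiles.contains("qa") || activeProfiles.contains("dev")) {
            appenders.add("CONSOLE_JSON");
        }
        // Second block: matches when local is active.
        if (activeProfiles.contains("local")) {
            appenders.add("CONSOLE_STDOUT");
        }
        return appenders;
    }

    public static void main(String[] args) {
        System.out.println(rootAppendersFor(Set.of("dev")));   // [CONSOLE_JSON]
        System.out.println(rootAppendersFor(Set.of("local"))); // [CONSOLE_STDOUT]
        System.out.println(rootAppendersFor(Set.of("prod")));  // []
    }
}
```

Note the last case: a profile that matches neither block gets no root Appender from this file, so any other deployed profile presumably needs a block of its own.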

Example Code

    package com.example.demo.product.service;

    import com.example.demo.product.domain.dto.ProductDto;
    import com.example.demo.product.repository.ProductRepository;
    import com.example.demo.product.util.EventNumberPicker;
    import java.util.List;
    import lombok.RequiredArgsConstructor;
    import lombok.extern.slf4j.Slf4j;
    import org.springframework.stereotype.Service;

    @Slf4j
    @Service
    @RequiredArgsConstructor
    public class ProductService {

        private final ProductRepository productRepository;

        public List<ProductDto> listProducts() {
            log.info("Hello World");

            return productRepository
                .findAll()
                .stream()
                .map(product -> new ProductDto(product, EventNumberPicker.pick(1, 1000)))
                .toList();
        }
    }

Result

By dividing logging like this by environment, local environments can retain visibility while also enabling more convenient searching in deployment environments.

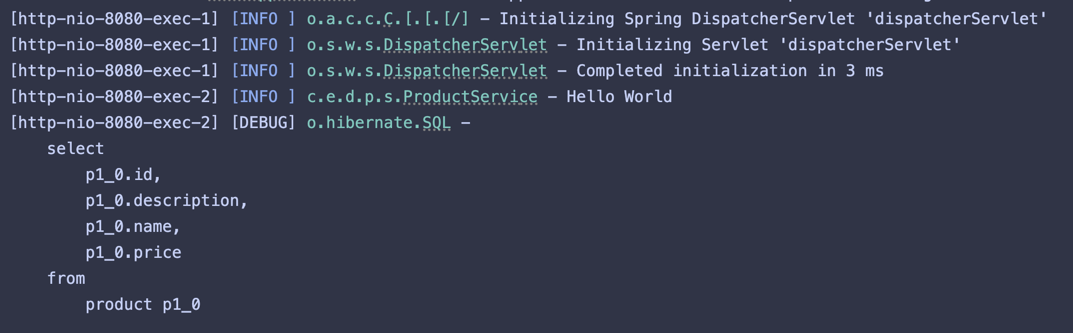

CONSOLE_STDOUT

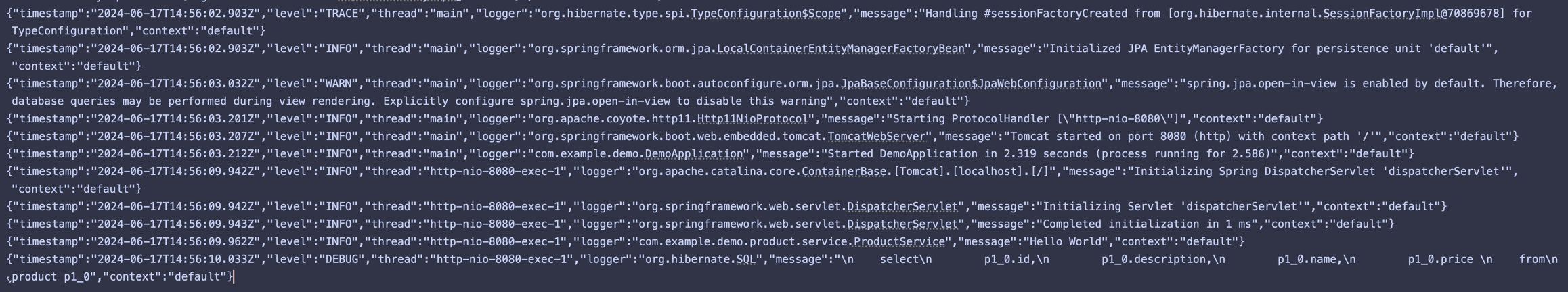

CONSOLE_JSON

Although I personally find the JSON output messy to read, it makes searching more convenient.